Author’s Thoughts

"ChatGPT has quickly become a giant of the global AI industry and the most popular AI chatbot in the world. The possibilities it opens up are breathtaking, but understanding its capabilities requires considering many aspects. This blog post shows how ChatGPT can benefit businesses and which aspects should be considered when implementing it."

Yuri Svirid, PhD. — CEO Silk Data

How ChatGPT Changed the AI Landscape

Any discussion of the AI industry inevitably turns to ChatGPT and its use for business purposes. Developed by OpenAI, this AI chatbot revolutionized the industry, opening a new era in artificial intelligence development and human-machine interaction.

A few facts about ChatGPT:

- 400 million weekly active users by February 2025, up from 200 million in August 2024.

- Over 2 million businesses from 156 countries integrated ChatGPT into their workflows.

- ChatGPT is used by 65% of journalists, software developers and marketers in their work.

For the past two years, this OpenAI solution has been used for content creation and translation, customer support, code writing and debugging, consulting and much more.

Given its growing popularity, convenience and the vast range of tasks it can solve, ChatGPT has become a tool enterprises want to adopt to boost their productivity and performance.

Silk Data presents a comprehensive overview of ChatGPT’s capabilities and the prospects for using it to achieve business goals.

Advent of LLMs and ChatGPT 4o

To understand why ChatGPT is so popular and how it can benefit businesses, it’s necessary to look at large language models (the class of models ChatGPT belongs to) and answer the question ‘What is ChatGPT 4o?’.

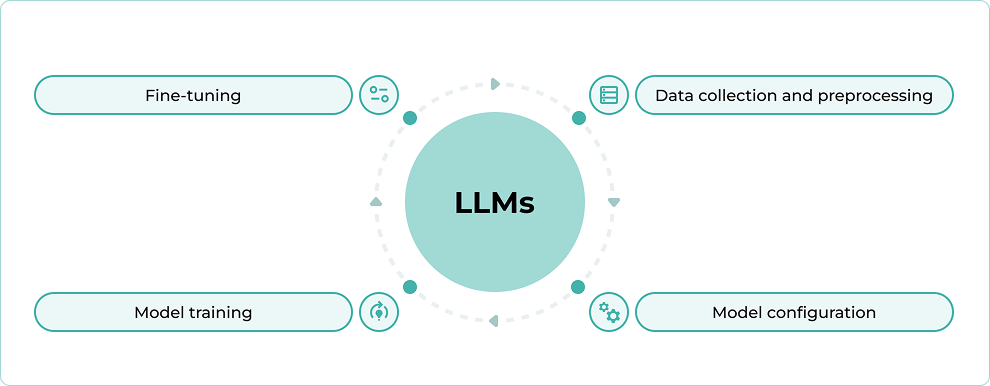

Large Language Models Fundamentals

Large language models, or LLMs, are advanced AI systems trained to perceive, process and generate human language. Their roots go back decades, to primitive chatbots that worked on predefined template patterns.

Modern LLMs are typically trained on massive amounts of data (from hundreds of billions to trillions of words) and demonstrate strong contextual understanding and semantic analysis, along with high response accuracy.

This is achieved through several fundamental features that define how LLMs work.

For example, the transformer architecture, built from stacked layers with a self-attention mechanism, allows the model to focus on the relevant parts of the input text and understand its context.
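To make self-attention more concrete, here is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d)·V, in plain Python. It is a simplification for illustration only: real transformers learn separate projection matrices for queries, keys and values and run many attention heads in parallel, whereas this sketch assumes the token vectors are used directly as Q, K and V.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention over a list of token vectors.

    Simplification (assumption): the token vectors serve directly as queries,
    keys and values, with no learned projections and a single head.
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        # Similarity of this token's query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, tokens)) for i in range(d)])
    return outputs, weights


# Three toy 2-dimensional "embeddings".
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(x, 3) for x in row])
```

Each row of `weights` sums to 1, so every output vector is a blend of the whole sequence, weighted towards the tokens most similar to the current one.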

Training is the most significant part of model development. It starts with feeding the model massive datasets (books, articles, websites, etc.), from which it learns word sequences, grammar and semantics, along with facts and the patterns connecting them.
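At its core, this stage teaches the model to predict the next word (more precisely, the next token) from the preceding text. The toy sketch below illustrates only that objective, using bigram counts over a tiny made-up corpus; it is a deliberately simplified stand-in, since an actual LLM learns these probabilities with a neural network over billions of examples rather than with a lookup table.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which word follows which: a toy stand-in for pre-training."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn raw counts into a probability distribution over the next word."""
    following = counts.get(word.lower(), Counter())
    total = sum(following.values())
    return {w: c / total for w, c in following.items()} if total else {}


corpus = [
    "the model reads the text",
    "the model predicts the next word",
    "the next word completes the text",
]
counts = train_bigram(corpus)
print(next_word_probs(counts, "the"))
```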

Training typically ends with fine-tuning, in which the model is further trained on additional labeled datasets so that it can solve specific tasks.
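A labeled fine-tuning dataset is usually just a file of input/output examples. The sketch below writes examples in the JSON Lines chat format that OpenAI documents for fine-tuning chat models (one `{"messages": [...]}` object per line); the support-ticket classification task and its labels are hypothetical.

```python
import json


def to_finetune_jsonl(examples, system_prompt):
    """Convert (input, label) pairs into JSON Lines chat-format training records."""
    lines = []
    for text, label in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": text},
                {"role": "assistant", "content": label},  # the desired answer
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


# Hypothetical labeled examples for a support-ticket classifier.
examples = [
    ("My parcel has not arrived yet", "delivery"),
    ("I was charged twice for one order", "billing"),
]
jsonl = to_finetune_jsonl(examples, "Classify the support ticket into one category.")
print(jsonl)
```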

Modern LLMs as Foundational Models

Over the past two years, LLMs have gained a number of advanced capabilities: responding not only to text but also to visual and graphical inputs; few-shot and zero-shot learning (performing unfamiliar tasks from just a few examples or from a general understanding of the language alone); and reasoning.
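In practice, few-shot learning often involves no training at all: a handful of solved examples is placed in the prompt, and the model infers the task from them. The sketch below builds such a prompt as a list of role/content chat messages, the message shape used by chat-completion APIs such as OpenAI’s; the sentiment task and examples are made up, and the actual call to a model is left out.

```python
def build_few_shot_messages(instruction, examples, query):
    """Build a chat-style few-shot prompt: instruction, solved examples, then the new query.

    Passing an empty `examples` list turns this into a zero-shot prompt.
    """
    messages = [{"role": "system", "content": instruction}]
    for user_text, answer in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": query})
    return messages


examples = [
    ("The delivery was fast and the product works great.", "positive"),
    ("The package arrived damaged.", "negative"),
]
messages = build_few_shot_messages(
    "Label the sentiment of each review as positive or negative.",
    examples,
    "Support solved my issue in minutes.",
)
for m in messages:
    print(m["role"], "->", m["content"])
```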

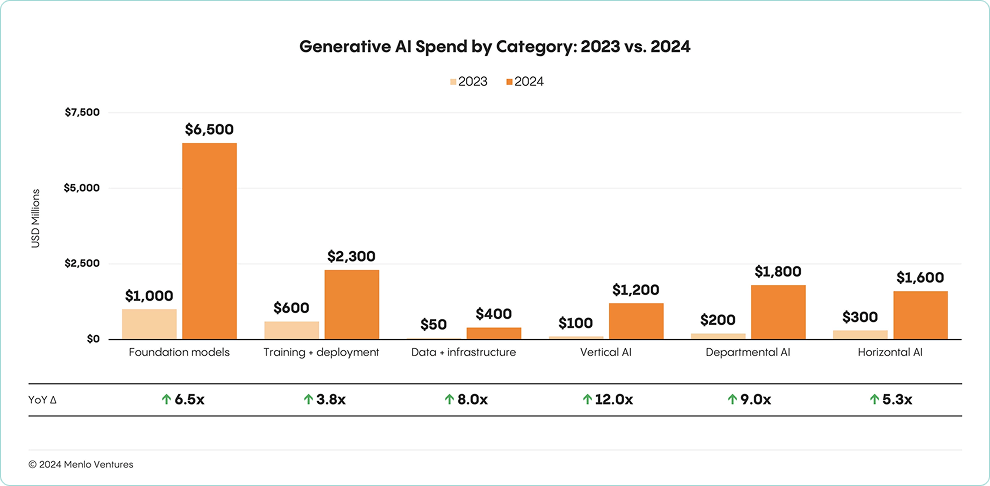

Moreover, modern LLMs have become foundational models: models that, having been trained on massive datasets, can be applied across a wide range of use cases. In addition, 2024 saw a rapid change in the architectural approach to LLM-based development, with effort shifting from data preparation and training to API usage and RAG.

Source: https://menlovc.com/2024-the-state-of-generative-ai-in-the-enterprise/

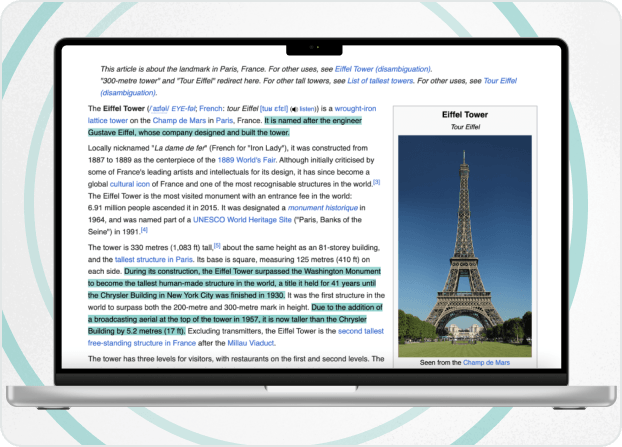

RAG, or retrieval-augmented generation, is a framework that augments an LLM’s generation capabilities with retrieval from external knowledge sources.

For instance, when the user submits a query, the RAG framework first searches an external knowledge base (the Internet or other additional sources) for the most relevant and up-to-date information related to the input. This information is then passed to the LLM as additional context, so the user gets a more accurate response.
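The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration under simplifying assumptions: the knowledge base is an in-memory list of made-up facts, relevance is scored by plain word overlap instead of the vector embeddings production RAG systems usually rely on, and the LLM call itself is omitted; the function returns the augmented prompt that would be sent to the model.

```python
import re


def tokenize(text):
    """Lowercase word set: a crude stand-in for embedding a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share and keep the best top_k."""
    q = tokenize(query)
    scored = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in scored[:top_k] if q & tokenize(d)]


def build_rag_prompt(query, documents, top_k=2):
    """Augment the user's query with retrieved context before it reaches the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


knowledge_base = [
    "Refunds are processed within 5 business days.",
    "Our office is open Monday to Friday.",
    "Orders over 50 EUR ship for free.",
]
print(build_rag_prompt("How long do refunds take?", knowledge_base))
```

Because the knowledge base is queried at request time, updating a document changes the answers immediately, without retraining the model.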

Using RAG drastically reduces the time developers spend on fine-tuning the model (though it doesn’t eliminate the need in every case), as there’s no need to create additional training datasets for the LLM to solve specific tasks.

ChatGPT 4o

ChatGPT 4o is one of the most prominent foundational models. Presented in May 2024, it is one of the latest GPT large language models from OpenAI (the company released a preview of GPT-4.5 on February 27, 2025). The "o" stands for "omni", a reference to the model’s support for multiple modalities: text, vision and audio.

It is a large multimodal model (LMM): a foundational model trained on data of different modalities (text, video, images) that can support real-time conversations and process text, images and audio.

OpenAI provides information on several aspects that distinguish 4o version from its predecessors:

- Ability to work with any text, audio, image and video combination as an input.

- Text, image and audio generation.

- Response times similar to those of a human in conversation.

- Significant improvement in handling non-English languages (more than 50 languages are now supported).

OpenAI describes GPT 4o as a huge step towards more natural human-computer interaction.

Using ChatGPT or other LLMs shifts the main expenses of an AI project from data preparation and training to API usage (or model serving).
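Since API usage becomes the main cost driver, it helps to estimate it early. LLM APIs typically bill per token, with separate prices for input and output tokens. The sketch below computes a monthly estimate; the per-million-token prices and traffic figures are placeholder assumptions, not quoted rates, so substitute the current values from your provider’s pricing page.

```python
def monthly_api_cost(requests_per_day, input_tokens, output_tokens,
                     price_in_per_m, price_out_per_m, days=30):
    """Estimate monthly LLM API cost from average tokens per request.

    Prices are per one million tokens, billed separately for input and output.
    """
    per_request = (input_tokens * price_in_per_m + output_tokens * price_out_per_m) / 1_000_000
    return per_request * requests_per_day * days


# Placeholder figures: 10,000 requests/day, 800 input and 300 output tokens each,
# and assumed prices of $2.50 (input) and $10.00 (output) per million tokens.
cost = monthly_api_cost(10_000, 800, 300, 2.50, 10.00)
print(f"Estimated monthly cost: ${cost:,.2f}")  # prints: Estimated monthly cost: $1,500.00
```

Note that output tokens often dominate the bill even when responses are shorter than prompts, which is why limiting response length is a common cost lever.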

ChatGPT for Business

After considering the essence of LLMs and ChatGPT in particular, it’s time to have a look at possible ChatGPT use cases in different industries.

Marketing

Marketing shows the highest adoption of ChatGPT-like products: 45% of marketers worldwide use AI in their marketing processes, and 71% of them rely on ChatGPT.

Though the most popular tasks are data analysis, text and image generation and content personalization, there are many non-standard use cases.

For instance, Microsoft’s Bing uses a custom implementation of GPT-4 to enhance its search features.

Marketers can use Bing’s advanced search features to get the most relevant market information. These search results can then be summarized, and ChatGPT can provide specific recommendations on marketing strategy or be set up to monitor competitors.

HR and Consulting

ChatGPT provides significant benefits for human resources, recruitment and career consulting. The main objective is to optimize candidate filtering and selection, which can be achieved through quick and efficient analysis of candidates’ skills and work experience.

We developed such a solution for automated resume screening. With ChatGPT at its core, the tool converts unstructured CVs into structured data, extracts and summarizes relevant information and matches it against a job description. As a result, CV processing time and hiring costs were cut by 50%.
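The general flow of such a screening tool (unstructured CV in, structured data out, then matching against a job description) might look roughly like this. It is a hedged sketch, not the code of the tool we built: `extract_fn` is a hypothetical stand-in for a ChatGPT call prompted to return JSON, the prompt wording is illustrative, and matching is reduced to a simple share-of-required-skills score.

```python
import json
from dataclasses import dataclass, field

EXTRACTION_PROMPT = (
    "Extract the candidate's name, years of experience and skills from the CV below. "
    'Reply with JSON only: {"name": str, "years": int, "skills": [str]}.\n\nCV:\n'
)


@dataclass
class Candidate:
    name: str
    years: int
    skills: set = field(default_factory=set)


def parse_cv(cv_text, extract_fn):
    """Ask the LLM (via the hypothetical extract_fn) for structured data and validate it."""
    raw = extract_fn(EXTRACTION_PROMPT + cv_text)
    data = json.loads(raw)  # fails loudly if the model returns malformed JSON
    return Candidate(data["name"], int(data["years"]), {s.lower() for s in data["skills"]})


def match_score(candidate, required_skills, min_years):
    """Share of required skills the candidate has; 0 if below the experience bar."""
    if candidate.years < min_years:
        return 0.0
    required = {s.lower() for s in required_skills}
    return len(candidate.skills & required) / len(required)


# Stub standing in for the real model call, for demonstration only.
def fake_llm(prompt):
    return '{"name": "Jane Doe", "years": 4, "skills": ["Python", "SQL", "Docker"]}'


cand = parse_cv("Jane Doe, 4 years as a backend developer...", fake_llm)
print(cand.name, match_score(cand, ["python", "sql", "kubernetes"], min_years=3))
```

Keeping the LLM responsible only for extraction, and doing the scoring in ordinary code, makes the ranking step deterministic and easy to audit.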

A similar solution can be used for career consulting: ChatGPT can help extract relevant information about a person’s career and provide comprehensive recommendations regarding new roles and responsibilities within the company.

Customer Support

According to Statista, about 57% of all customer support tasks are performed with ChatGPT.

The focus is to provide customers with 24/7 support availability and personalized interaction.

Implementing ChatGPT also optimizes customer service workflows. GPT-based chatbots can quickly answer common queries, automatically track orders, refunds and returns, or be linked to a company knowledge base, reducing the time spent finding answers.
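Operations such as order tracking are usually wired up through function (tool) calling: the model does not query company systems itself; it returns the name of a function plus JSON arguments, and the application executes it. The sketch below shows only the dispatch side; the `track_order` function, the order data and the simplified shape of `model_tool_call` are hypothetical rather than copied from a real API response.

```python
import json

# Hypothetical order store; in production this would be the company's order system.
ORDERS = {"A1001": "shipped", "A1002": "processing"}


def track_order(order_id):
    """Look up an order's status."""
    return {"order_id": order_id, "status": ORDERS.get(order_id, "not found")}


# Registry of the only functions the chatbot is allowed to call.
TOOLS = {"track_order": track_order}


def dispatch(tool_call):
    """Execute a model-requested tool call and return the result as JSON text.

    `tool_call` is assumed to be {"name": ..., "arguments": "<JSON string>"}.
    Unknown tool names are rejected instead of executed.
    """
    func = TOOLS.get(tool_call["name"])
    if func is None:
        return json.dumps({"error": f"unknown tool {tool_call['name']}"})
    args = json.loads(tool_call["arguments"])
    return json.dumps(func(**args))


# What the model might return for "Where is my order A1001?"
model_tool_call = {"name": "track_order", "arguments": '{"order_id": "A1001"}'}
print(dispatch(model_tool_call))
```

The JSON result would then be sent back to the model so it can phrase a natural-language answer for the customer; the whitelist in `TOOLS` keeps the chatbot from triggering anything it was not explicitly given.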

Note! Despite the advanced capabilities of LLM-based solutions in customer service, recent surveys show that many customers are reluctant to interact with AI when solving their problems. This can be mitigated with AI-based avatars that provide human-like interaction thanks to advanced learning, mimicry and voice imitation capabilities.

Education

Education is another industry that benefits greatly from AI tools, and from ChatGPT in particular. According to a Forbes survey, about 60% of educators used AI in their teaching in 2024, and the number is increasing.

Lesson planning, learning materials summarization and recommendations – these are just a few tasks AI-based solutions can solve.

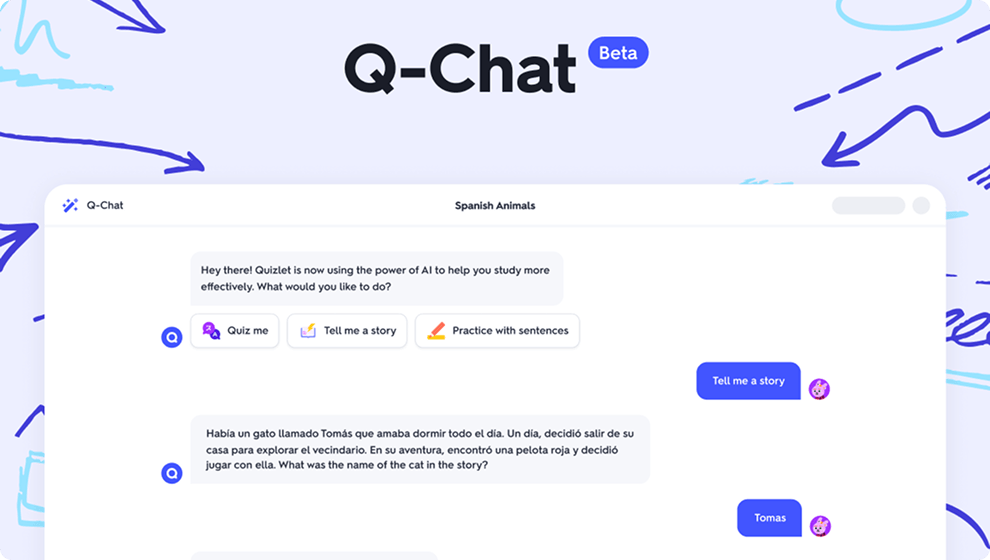

One of the most prominent LLM-based tools in education is Q-chat, Quizlet’s AI tutor built on a combination of the ChatGPT API and Quizlet’s vast content library.

Source: https://quizlet.com/blog/meet-q-chat

Using active learning techniques, Q-chat can test students’ knowledge with a question-and-answer approach, provide comprehensive study guides on topics or subjects and recommend study habits.

Note! To find out more about the prospects of ChatGPT and AI in education, have a look at Silk Data’s blog post on AI trends in education.

Retail

ChatGPT and other foundational large language models can benefit the retail industry by providing shopping assistance and tracking customer loyalty and satisfaction.

For instance, famous brands such as H&M and Nike already use LLM-based chatbots to track orders and provide personalized shopping recommendations.

Amazon also uses an AI-based recommendation system that suggests products based on user behavior, such as browsing history, past purchases and items in the cart. As a result, it increases average order values and customer satisfaction.
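Recommending products from past behavior can be illustrated with item co-occurrence: items that often appear in the same baskets as what the user has viewed, bought or added to the cart get suggested. This is a deliberately simple sketch over made-up purchase data, not Amazon’s actual system, which is far more sophisticated.

```python
from collections import Counter
from itertools import combinations


def build_cooccurrence(baskets):
    """Count how often each pair of items appears in the same basket."""
    co = Counter()
    for basket in baskets:
        for a, b in combinations(sorted(set(basket)), 2):
            co[(a, b)] += 1
            co[(b, a)] += 1
    return co


def recommend(user_items, co, top_n=3):
    """Score unseen items by co-occurrence with the user's history and cart."""
    seen = set(user_items)
    scores = Counter()
    for (a, b), count in co.items():
        if a in seen and b not in seen:
            scores[b] += count
    return [item for item, _ in scores.most_common(top_n)]


# Made-up purchase histories.
baskets = [
    ["laptop", "mouse", "laptop bag"],
    ["laptop", "mouse"],
    ["mouse", "mousepad"],
    ["laptop", "laptop bag"],
]
co = build_cooccurrence(baskets)
print(recommend(["laptop"], co))
```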

Note! You can find out more about the prospects of ChatGPT and the benefits of applying it in web development in one of our blog posts.

Business Benefits of ChatGPT

Generative AI agents have proved to be a promising field of AI, and many companies are optimistic about using ChatGPT and its analogues in business. It is therefore worth reviewing how artificial intelligence and intelligent agents can be used in practice.

Automation

One of the key advantages of ChatGPT is that it can automate routine and time-consuming tasks, saving specialists’ time and company resources without a drop in productivity.

For instance, ChatGPT-powered tools can provide 24/7 service support, generate content templates, extract data and summarize huge documents, saving hours of employees’ working time.

Workflow Optimization

ChatGPT is also good at optimizing work processes at different stages across business departments.

A ChatGPT-based tool can prioritize tasks within a project, set deadlines and assist with scheduling. It can streamline work with corporate knowledge by organizing documents and other vital data, or draft emails to speed up communication between departments.

Thinking about workflow optimization through AI usage? Contact us and share your needs.

Wide Range of Tasks

ChatGPT’s advanced learning capabilities and scalability make it helpful for a wide variety of tasks.

Customer support, content and code creation, creative idea generation, training, consulting and personalized recommendations: all these tasks can be handled with OpenAI’s product (if it’s properly implemented, configured and fine-tuned).

Research on business implementations of ChatGPT demonstrates the chatbot’s positive impact.

In customer service, response times for customer queries were reduced by 60%, while in HR the hiring process was reported to be 50% shorter. The gains are even higher in content and digital marketing, where ChatGPT speeds up SEO-optimized content generation fivefold.

ChatGPT Integration vs Custom LLM Development

From both a business and a development point of view, integrating ChatGPT has one undeniable advantage: a solution or product feature can be delivered very quickly.

ChatGPT (and foundational LLMs similar to it) is trained on massive datasets, enabling it to solve a vast range of tasks. This relieves developers of the time-consuming task of collecting and labelling an initial dataset. In essence, the model training process can be reduced to creating a small test dataset, which dramatically reduces model development costs.
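With a foundational model, that small test dataset becomes the main quality gate: a few dozen labeled inputs against which every prompt or model change is scored. A minimal sketch, assuming a hypothetical `classify` function that wraps the model call (stubbed here with a keyword rule so the example runs offline) and exact-match scoring:

```python
def evaluate(classify, test_set):
    """Return accuracy and the failing cases for a labeled test set."""
    failures = []
    for text, expected in test_set:
        predicted = classify(text)
        if predicted.strip().lower() != expected.lower():
            failures.append((text, expected, predicted))
    accuracy = 1 - len(failures) / len(test_set)
    return accuracy, failures


# Hypothetical stub in place of a prompted LLM call.
def classify(text):
    return "billing" if "charged" in text.lower() else "delivery"


test_set = [
    ("I was charged twice", "billing"),
    ("Where is my parcel?", "delivery"),
    ("Refund my payment please", "billing"),
]
accuracy, failures = evaluate(classify, test_set)
print(f"accuracy={accuracy:.2f}", failures)
```

Inspecting `failures` rather than only the accuracy figure shows which kinds of inputs the prompt still handles badly.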

However, relying on a particular LLM API provider makes the product dependent on that provider. Latency and outages, ChatGPT’s weak spot (the last significant incident happened in December 2024), can degrade the performance of a company’s LLM-based solution, while operational costs increase as well.
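A common way to soften the impact of latency spikes and short outages is to retry failed calls with exponential backoff and, if the provider stays unavailable, fall back to a secondary model. The sketch below is provider-agnostic: `primary` and `fallback` are hypothetical callables wrapping whatever client you use, and `TransientError` stands in for the timeout or rate-limit exceptions your SDK actually raises.

```python
import random
import time


class TransientError(Exception):
    """Stand-in for timeouts, rate limits and 5xx errors from an LLM provider."""


def call_with_retries(primary, fallback, prompt, max_attempts=3, base_delay=0.5,
                      sleep=time.sleep):
    """Try the primary provider with exponential backoff, then use the fallback."""
    for attempt in range(max_attempts):
        try:
            return primary(prompt)
        except TransientError:
            if attempt < max_attempts - 1:
                # 0.5 s, 1 s, 2 s, ... plus jitter so clients don't retry in lockstep.
                sleep(base_delay * 2 ** attempt + random.uniform(0, 0.1))
    return fallback(prompt)


# Demo: a primary that is always down, and a fallback that answers.
def flaky_primary(prompt):
    raise TransientError("service unavailable")


def backup_model(prompt):
    return f"[backup] answer to: {prompt}"


print(call_with_retries(flaky_primary, backup_model, "Hello", sleep=lambda s: None))
```

The `sleep` parameter is injectable so the backoff can be tested without actually waiting.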

At the same time, developing an independent, task-specific LLM can be far more time-consuming at the development stage, but such a fully customized solution tends to cause fewer problems in the future.

Considerations on ChatGPT Integration

Accuracy

ChatGPT shows significant progress and continuous improvement in the accuracy of its responses.

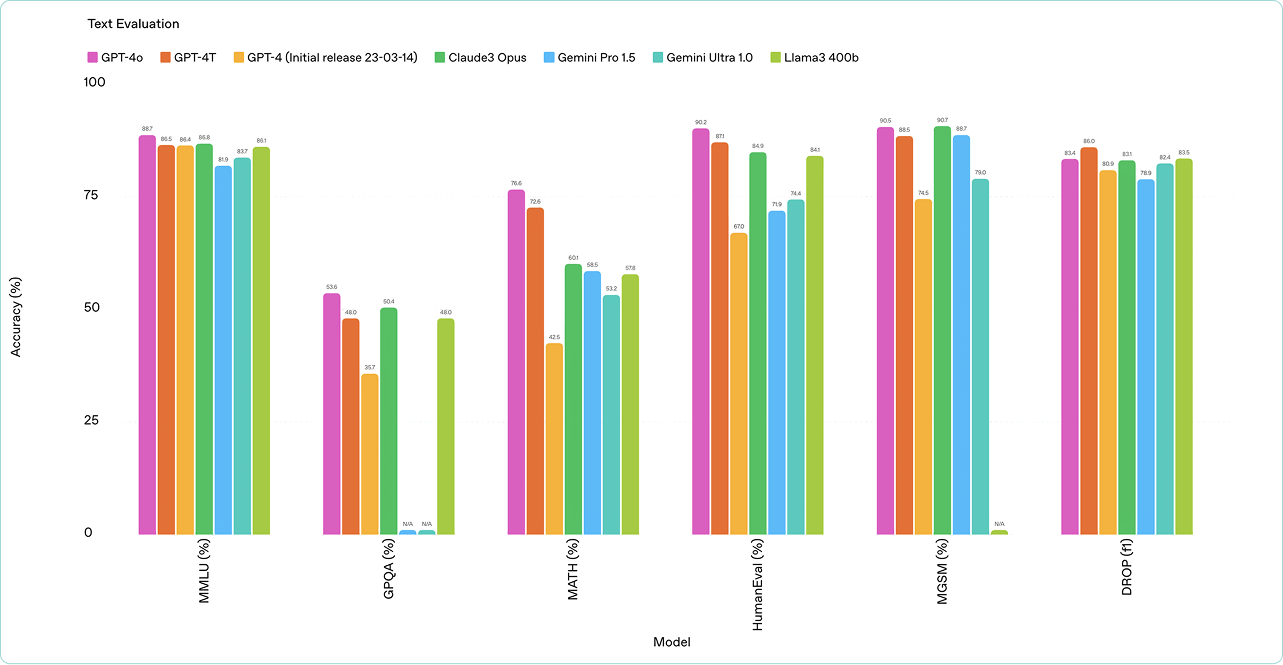

OpenAI provides comprehensive benchmark statistics on the GPT 4o model’s ability to solve complicated tasks, comparing it to other foundational LLMs developed by Google and Anthropic.

Source: https://openai.com/index/hello-gpt-4o/

In practice, however, businesses still can’t fully delegate task processing to a machine. Additional monitoring and review are required to catch inaccurate or completely wrong (and potentially harmful) results.
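One way to keep a human in the loop is to auto-approve only outputs that pass automatic checks and send everything else to a review queue. In the sketch below, the checks (a confidence threshold and a short list of sensitive phrases) are illustrative assumptions, and so is the confidence value: chat APIs do not return a single confidence score by default, so in practice it might come from a separate classifier, token log-probabilities where the API exposes them, or a second model acting as a grader.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    confidence: float  # assumed 0..1 score from some upstream check


SENSITIVE_TERMS = {"refund approved", "legal advice", "diagnosis"}  # illustrative only


def route(draft, threshold=0.8):
    """Return 'auto' if the draft may be sent as-is, otherwise 'human_review'."""
    text = draft.text.lower()
    if draft.confidence < threshold:
        return "human_review"
    if any(term in text for term in SENSITIVE_TERMS):
        return "human_review"
    return "auto"


print(route(Draft("Your order ships tomorrow.", 0.93)))
print(route(Draft("Refund approved for your order.", 0.95)))
print(route(Draft("It might be the battery.", 0.55)))
```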

Monopoly

While the ChatGPT API offers powerful advantages, mostly related to lower model development costs, monopoly risks should also be considered.

As mentioned above, relying on OpenAI as the provider makes the solution dependent on it. Companies may have to reconsider their strategy because of OpenAI price changes, high latency or outages. Moreover, such dependency raises ethical and security concerns regarding OpenAI’s data privacy and management policies.

One of the best ways to avoid these problems is to turn to open-source models such as Llama, which can be a good alternative to ChatGPT and offer more freedom.
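Provider dependency is easier to manage when application code talks to a thin interface rather than to a vendor SDK directly; switching from the ChatGPT API to a self-hosted Llama then becomes a configuration change. The sketch below uses a `typing.Protocol`; both provider classes, the configuration names and the localhost URL are hypothetical placeholders whose bodies would wrap the real client calls.

```python
from typing import Protocol


class ChatProvider(Protocol):
    """Anything that turns a prompt into a completion."""

    def complete(self, prompt: str) -> str: ...


class OpenAIProvider:
    """Hypothetical wrapper; the real version would call the OpenAI API here."""

    def complete(self, prompt: str) -> str:
        return f"(openai) {prompt}"


class SelfHostedLlamaProvider:
    """Hypothetical wrapper around a self-hosted Llama server."""

    def __init__(self, base_url: str):
        self.base_url = base_url

    def complete(self, prompt: str) -> str:
        return f"(llama @ {self.base_url}) {prompt}"


def make_provider(name: str) -> ChatProvider:
    """Pick the backend from configuration instead of hard-coding a vendor."""
    if name == "openai":
        return OpenAIProvider()
    if name == "llama":
        return SelfHostedLlamaProvider("http://localhost:8000")
    raise ValueError(f"unknown provider: {name}")


def summarize(provider: ChatProvider, text: str) -> str:
    # Application code depends only on the ChatProvider interface.
    return provider.complete(f"Summarize: {text}")


print(summarize(make_provider("llama"), "quarterly report"))
```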

One of Silk Data’s projects took the open-source route: to find the optimal balance of cost and performance, we moved a private LLM to a Hetzner server. As a result, the client company received a fully operational private LLM-based chatbot within a month.

Want to launch your own private LLM? Let’s discuss the details!

Regular Updates

Although regular updates to the ChatGPT API bring benefits such as improved performance and bug fixes, they can also cause several problems.

For example, unexpected changes can shift model behavior and affect response accuracy and latency. Tools relying on the ChatGPT API may also require additional updates to remain compatible with the new version. Altogether, these factors may lead to additional costs and drops in model performance.
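A practical safeguard is to pin a dated model snapshot (OpenAI publishes names such as `gpt-4o-2024-08-06` alongside the moving `gpt-4o` alias) and to move to a newer one only after replaying a set of golden prompts against both versions. The sketch below compares the two versions’ answers; `old_model` and `new_model` are hypothetical callables, and exact string comparison is a simplifying assumption, since real checks usually compare extracted fields or use a grader.

```python
PINNED_MODEL = "gpt-4o-2024-08-06"  # dated snapshot to pass to the client instead of the "gpt-4o" alias


def regression_check(old_model, new_model, golden_prompts, min_agreement=0.95):
    """Replay golden prompts on both versions; approve the upgrade only if answers mostly agree."""
    changed = []
    for prompt in golden_prompts:
        old, new = old_model(prompt), new_model(prompt)
        if old.strip() != new.strip():
            changed.append((prompt, old, new))
    agreement = 1 - len(changed) / len(golden_prompts)
    return agreement >= min_agreement, agreement, changed


# Hypothetical stand-ins for the two model versions.
def old_model(prompt):
    return prompt.upper()


def new_model(prompt):
    return prompt.upper() if "invoice" not in prompt else "INVOICE?"


golden = ["classify: invoice overdue", "classify: parcel late", "classify: password reset"]
ok, agreement, changed = regression_check(old_model, new_model, golden)
print(ok, round(agreement, 2), changed)
```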

Privacy and Security Issues

Privacy and security issues remain critical when using any LLM API. Businesses must consider potential risks regarding sensitive data and specific regulations.

In practice, when a user sends input to a ChatGPT-based tool, the content of that input is transferred to OpenAI’s servers, which can be risky when dealing with sensitive data.

At the same time, attacks on OpenAI’s systems can also affect ChatGPT-based tools, putting corporate data at risk.

Proper mitigation strategies can minimize these risks. Data anonymization, encryption and API access control help prevent data breaches and leaks.
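Anonymization can happen before any data leaves your infrastructure: personal data is replaced with placeholders before the prompt is sent, then restored locally in the reply. The regex-based sketch below catches only e-mail addresses and phone-number-like strings; these patterns are illustrative assumptions, and production systems typically rely on dedicated PII-detection tooling that also covers names, addresses and more.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s()-]{7,}\d"),
}


def anonymize(text):
    """Replace detected PII with numbered placeholders; return text and the mapping."""
    mapping = {}
    for label, pattern in PATTERNS.items():
        def repl(match, label=label):
            key = f"<{label}_{len(mapping) + 1}>"
            mapping[key] = match.group(0)
            return key
        text = pattern.sub(repl, text)
    return text, mapping


def deanonymize(text, mapping):
    """Restore the original values locally, after the LLM has responded."""
    for key, value in mapping.items():
        text = text.replace(key, value)
    return text


masked, mapping = anonymize("Contact John at john.doe@example.com or +1 555 123 4567.")
print(masked)
print(deanonymize(masked, mapping))
```

Only the masked text would be sent to the API; the mapping never leaves your systems.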

Conclusions

ChatGPT has undeniably revolutionized the AI landscape, becoming a flagship of modern business innovation. Its ability to automate tasks, optimize workflows, and handle a wide range of functions has made it an indispensable tool across industries, from marketing and customer support to education and retail.

However, as with any powerful technology, the integration of ChatGPT comes with its own set of challenges. While the model offers significant advantages, reliance on a single provider like OpenAI can be risky, implying dependency on API availability, potential cost increases, and ethical considerations regarding data usage along with various security concerns.

Greater customization and control call for a hybrid approach, in which ChatGPT is combined with custom solutions and open LLMs, along with various risk mitigation strategies.

In essence, the best policy for using any ChatGPT-like solution in business is to find and maintain a balance between AI and human effort.

Our Solutions

We work in various directions, providing a vast range of IT and AI services. Whatever the task, we can deliver products of different complexity and maturity, from a proof of concept or minimum viable product to full product development.