Author’s Thoughts

"Strategic model retirement is key to maximizing the long-term value of your chatbot by ensuring it remains efficient, accurate, and secure. Proactively managing transitions and refining your models ensures continuous improvement, better user satisfaction, and a competitive edge."

Yuri Svirid, PhD. — CEO Silk Data

Model Retirement for Chatbots

Large Language Models (LLMs) have fundamentally transformed chatbot development, providing advanced natural language understanding and generation capabilities. However, effectively deploying an LLM-powered chatbot involves more than just initial setup; it requires ongoing refinement throughout the model lifecycle. This ensures continuous accuracy, relevance, and technical performance in dynamic environments.

Model Lifecycle Overview

Each chatbot leveraging an LLM experiences several key stages:

- Deployment

Integration and launch of a pretrained LLM.

- Monitoring

Continuous evaluation of chatbot performance in live scenarios.

- Maintenance and Refinement

Regular updates, optimizations, and fine-tuning.

- Retirement

Phasing out obsolete models in favor of updated versions.
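The four stages above can be pictured as a small state machine. The sketch below is only an illustration: the `Stage` names mirror the list, but the allowed transitions and the `advance` helper are assumptions made for the example, not something the lifecycle prescribes.

```python
from enum import Enum


class Stage(Enum):
    DEPLOYMENT = "deployment"
    MONITORING = "monitoring"
    MAINTENANCE = "maintenance_and_refinement"
    RETIREMENT = "retirement"


# Assumed transitions: a model is monitored after launch, may cycle between
# monitoring and refinement, and can be retired from either of those stages.
ALLOWED = {
    Stage.DEPLOYMENT: {Stage.MONITORING},
    Stage.MONITORING: {Stage.MAINTENANCE, Stage.RETIREMENT},
    Stage.MAINTENANCE: {Stage.MONITORING, Stage.RETIREMENT},
    Stage.RETIREMENT: set(),  # terminal: a retired model is not revived
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return the new stage, or raise if the move is not allowed."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target
```

Treating retirement as a terminal state makes the point that replacing a model is a planned, one-way transition rather than an ad hoc switch.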

Detailed Steps for LLM Refinement

- 1

Performance Monitoring

Effective refinement relies heavily on systematic monitoring. This includes analyzing logs of user interactions, response latency, accuracy metrics (such as BLEU, ROUGE, precision, recall), and user satisfaction scores. Data analytics tools and custom monitoring dashboards facilitate identifying trends and issues proactively.

- 2

Addressing Model Degradation

Model degradation occurs when chatbot performance gradually declines due to factors like data drift or domain shifts. Indicators of degradation include increased latency, declining accuracy, or growing user dissatisfaction. Detecting degradation early through analytics allows timely interventions such as retraining or fine-tuning.

- 3

Ensuring Model Compatibility

Compatibility checks are critical before rolling out a model update. Validation involves integration testing, environment checks, and scenario-based tests to confirm that a new LLM version remains stable and continues to work with existing systems and APIs.

- 4

Model Updates and Fine-Tuning

Refinement strategies involve retraining the LLM with updated or additional data to enhance accuracy. Fine-tuning targets specific conversational contexts or domains, optimizing responses to particular user queries or scenarios. Frequent, incremental updates keep the model improving without extensive resource usage.

- 5

Version Control and Management

Version control systems like Git, alongside dedicated model registries, keep track of the different model iterations. This simplifies collaborative tracking of updates, configurations, and performance histories. Accurate versioning also makes it easier to roll back and to compare model updates.

- 6

Validation and Testing

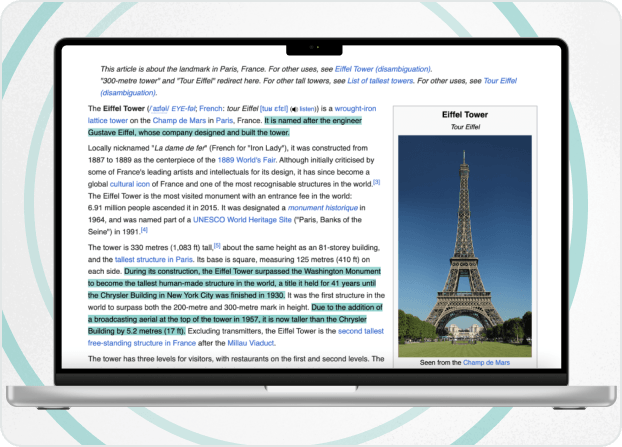

Validation ensures the reliability of refined models through rigorous testing methodologies. Key validation techniques include:

- A/B Testing

Comparing user responses between new and existing models.

- Regression Testing

Automated tests to ensure updates don't negatively impact existing functionality.

- Shadow Deployments

Running new models alongside older versions, assessing live performance and user feedback before full transition.
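As a rough illustration of the shadow deployment technique above, the sketch below sends every request to both models but only ever returns the live model's reply. The `Model` callable interface, `handle_request`, and `agreement_rate` are hypothetical names introduced for this example, and exact-match agreement is a deliberately crude stand-in for whatever comparison a team actually uses.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple

Model = Callable[[str], str]  # assumed interface: prompt in, reply out


@dataclass
class ShadowRecord:
    prompt: str
    live_reply: str
    shadow_reply: str
    live_seconds: float
    shadow_seconds: float


def _timed(model: Model, prompt: str) -> Tuple[str, float]:
    """Call the model and measure how long the call took."""
    start = time.perf_counter()
    reply = model(prompt)
    return reply, time.perf_counter() - start


def handle_request(live: Model, shadow: Model, prompt: str,
                   log: List[ShadowRecord]) -> str:
    """Serve the live reply; record the shadow reply for later analysis."""
    live_reply, live_s = _timed(live, prompt)
    try:
        shadow_reply, shadow_s = _timed(shadow, prompt)
    except Exception as exc:  # a failing candidate must never affect users
        shadow_reply, shadow_s = f"<shadow error: {exc}>", float("inf")
    log.append(ShadowRecord(prompt, live_reply, shadow_reply, live_s, shadow_s))
    return live_reply


def agreement_rate(log: List[ShadowRecord]) -> float:
    """Share of requests where both models gave the same normalized reply."""
    if not log:
        return 0.0
    same = sum(
        r.live_reply.strip().lower() == r.shadow_reply.strip().lower()
        for r in log
    )
    return same / len(log)
```

In a real service the shadow call would usually run asynchronously so it adds no latency for the user, and the logged records would feed the same dashboards used for the live model.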

What Is RAG?

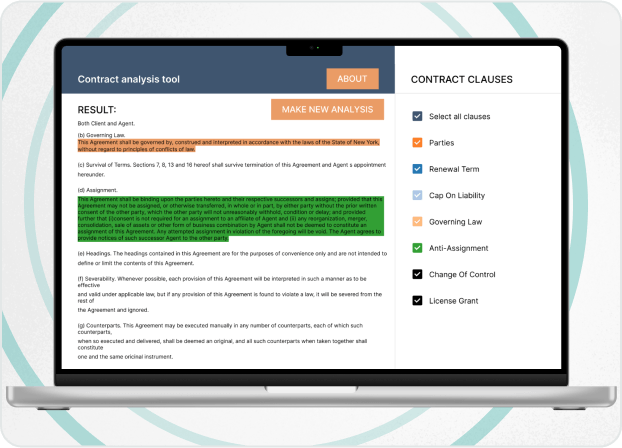

Retrieval-Augmented Generation (RAG) systems have become increasingly popular, particularly for creating chatbots that can effectively respond to user queries by accessing a corporate knowledge base.

The core RAG process consists of two primary components: retrieval, where relevant documents are extracted from a knowledge base, and generation, where these documents are analyzed by an LLM to create comprehensive answers.

Evaluating RAG systems involves assessing them end-to-end and at a granular level, focusing on aspects such as data quality, system performance, response relevance, and security.

The quality of a RAG system significantly depends on the underlying data. It's crucial to ensure documents are accurate, comprehensive, and regularly updated. Proper chunking (breaking data into manageable pieces) and embedding generation (transforming data into searchable vector representations) directly impact retrieval accuracy. Employing tools like cosine similarity for duplicate detection, readability scores, and semantic validation can help maintain data quality.
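To make the chunking and duplicate-detection ideas concrete, here is a minimal sketch using only the standard library. The `embed` function is a toy bag-of-words counter standing in for a real embedding model, and the chunk size, overlap, and 0.9 threshold are illustrative assumptions rather than recommended settings.

```python
import math
from collections import Counter
from itertools import combinations
from typing import List, Tuple


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into word windows of `size` that overlap by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    chunks = []
    for start in range(0, len(words), size - overlap):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def near_duplicates(chunks: List[str], threshold: float = 0.9) -> List[Tuple[int, int]]:
    """Return index pairs of chunks whose similarity meets the threshold."""
    vectors = [embed(chunk) for chunk in chunks]
    return [
        (i, j)
        for i, j in combinations(range(len(chunks)), 2)
        if cosine(vectors[i], vectors[j]) >= threshold
    ]
```

Comparing every pair is quadratic in the number of chunks, so a large knowledge base would normally rely on a vector index for this check instead.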

Ultimately, iterative improvements driven by detailed evaluations ensure a highly effective RAG-based chatbot.

Because RAG systems are increasingly adopted in corporate environments to enhance chatbot performance, evaluating their effectiveness is crucial for ensuring accurate and reliable responses. The evaluation breaks down into the following components.

Key Components of RAG Evaluation

- 1

Data Quality

The foundation of any RAG system lies in its knowledge base. Ensuring the accuracy, completeness, and relevance of documents is paramount. Techniques such as chunking (dividing documents into manageable pieces) and generating precise embeddings (vector representations) are essential. Regular assessments for duplicates using cosine similarity and evaluating readability scores can maintain data integrity. - 2

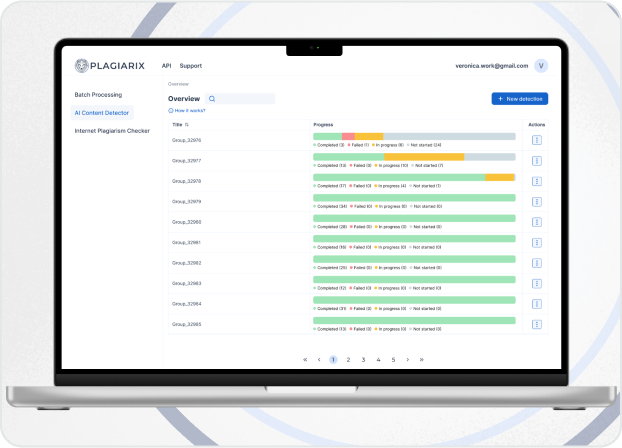

System Performance

Monitoring response times, system uptime, and resource utilization is vital. Implementing dashboards with tools like Grafana and Prometheus facilitates real-time tracking of these metrics, ensuring the system operates efficiently.

- 3

Response Relevance

Evaluating the pertinence of chatbot responses involves both automated metrics and human judgment. Metrics such as BLEU and ROUGE scores offer quantitative insights, while human reviewers can assess the contextual appropriateness of responses.

- 4

Security and Robustness

It's crucial to test LLMs for vulnerabilities, including susceptibility to adversarial prompts or potential data leaks. Utilizing frameworks like Garak, Giskard, and PyRIT can help identify and mitigate these risks, ensuring the system's resilience against malicious inputs.
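For the response relevance component above, the following sketch shows the idea behind a ROUGE-1 style score: unigram overlap between a chatbot reply and a reference answer. It is a simplified illustration (whitespace tokenization, no stemming), and the `rouge1` function name is introduced here; an actual evaluation would more likely use an established metrics package.

```python
from collections import Counter
from typing import Dict


def rouge1(candidate: str, reference: str) -> Dict[str, float]:
    """Unigram-overlap precision, recall, and F1 between two texts."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}

    # Clipped overlap: each word counts at most as often as it appears in both.
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}
```

A score like this only measures word overlap, which is why the human review mentioned above remains necessary for judging whether a reply is actually appropriate in context.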

Challenges in RAG Evaluation

Metric Selection

Choosing appropriate evaluation metrics is complex. While automated metrics provide objective data, they may not fully capture the nuances of human language, necessitating a combination of both automated and manual evaluations.

Continuous Monitoring

LLMs can exhibit unpredictable behaviors over time. Implementing continuous monitoring mechanisms is essential to promptly detect and address issues, maintaining system reliability.
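One simple way to picture continuous monitoring is a rolling window over a quality score, with an alert when the recent average falls too far below a baseline. The `RollingMonitor` class, the window size, and the allowed drop are assumptions chosen for this sketch; the score itself could be any per-response metric a team already collects.

```python
from collections import deque


class RollingMonitor:
    """Flags a drop when the recent mean score falls below the baseline."""

    def __init__(self, baseline: float, window: int = 50, max_drop: float = 0.05):
        self.baseline = baseline
        self.max_drop = max_drop
        self.scores = deque(maxlen=window)  # keeps only the newest scores

    def observe(self, score: float) -> bool:
        """Record a score; return True if the window signals degradation."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet to judge
        mean = sum(self.scores) / len(self.scores)
        return mean < self.baseline - self.max_drop
```

For example, with `RollingMonitor(baseline=0.9, window=3)`, three scores of 0.9 raise no alert, while a following score of 0.7 pulls the window mean below 0.85 and `observe` returns `True`.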

In summary, a comprehensive evaluation of RAG-based chatbot solutions encompasses assessing data quality, system performance, response relevance, and security measures. Employing a blend of automated tools and human oversight ensures that these systems deliver accurate, efficient, and secure responses, aligning with organizational objectives.

Final Words

Managing your chatbot through the entire model lifecycle, including strategic retirement, is a must. Model retirement isn't just about discarding outdated technology; it's a proactive approach to ensuring continuous chatbot efficiency, accuracy, and security. By carefully planning model transitions, maintaining rigorous validation practices, and leveraging Retrieval-Augmented Generation (RAG) for enhanced accuracy, you set the foundation for a robust, reliable chatbot solution.
